Tülu 3 405B is the latest open-source post-trained model from the Allen Institute for AI (Ai2). Built with the Reinforcement Learning from Verifiable Rewards (RLVR) framework, it surpasses DeepSeek-V3 and matches the performance of GPT-4o. This article looks at how the Tülu 3 approach scales to 405 billion parameters, how the model outperforms other open-weight models such as Llama 3.1, especially on complex tasks like MATH, and what RLVR contributes to performance at large scale.

1. Tülu 3 405B: A New Benchmark in Open-Source AI

- Tülu 3 405B is the final member of the Tülu 3 family and a showcase for the Reinforcement Learning from Verifiable Rewards (RLVR) framework. At 405 billion parameters, it is one of the largest open-source models available today.

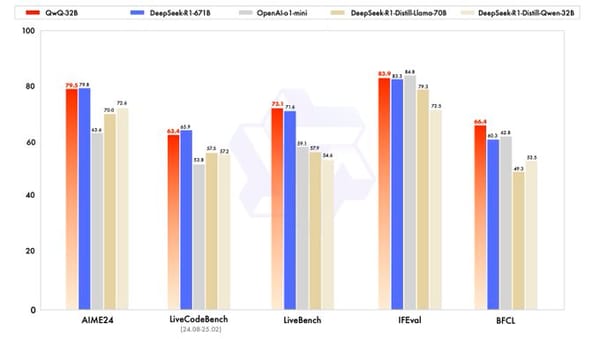

- Tülu 3 405B surpasses the performance of DeepSeek-V3, a well-known model in the AI community. The result is attributed to RLVR, which trains the model on rewards that can be checked for correctness rather than on subjective preference scores.

- The performance of Tülu 3 405B is on par with GPT-4o. This is a significant milestone for open-source AI: it shows that open models can compete with proprietary ones in both performance and scale.

2. Reinforcement Learning from Verifiable Rewards (RLVR): The Secret Sauce

- The RLVR framework is at the core of Tülu 3 405B's success. In this approach to reinforcement learning, the model is rewarded only when its output can be verified, for example when a math answer matches a known solution. RLVR has been particularly effective at larger scales: the improvement in MATH performance was more significant at the 405B scale than for the smaller 70B and 8B models.

- This result is similar to the findings of the DeepSeek-R1 report, which also indicated that this kind of reinforcement learning has a more pronounced impact on larger models. The gain in accuracy that Tülu 3 405B shows on complex tasks like MATH is attributed to RLVR.

- Scalability is one of the most promising aspects of RLVR. As models grow in size and complexity, efficient training methods matter more, and RLVR has been applied across the Tülu 3 family at sizes from 8B to 405B.
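The idea of a verifiable reward described above can be illustrated with a small sketch. This is not the Tülu 3 training code. It assumes, purely for illustration, that each completion ends with a line of the form `Answer: <value>` and that a reference answer is available for every prompt. The function names (`extract_final_answer`, `normalize`, `verifiable_reward`) and the `reward_value` parameter are hypothetical.

```python
import re
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker, or None if absent.

    The 'Answer:' convention is an assumption made for this sketch.
    """
    matches = re.findall(r"Answer:\s*(.+)", completion)
    if not matches:
        return None
    return matches[-1].strip()


def normalize(answer: str) -> str:
    """Apply light normalization so '1,024.' and '1024' compare equal."""
    cleaned = answer.strip().rstrip(".")
    return cleaned.replace(",", "").replace(" ", "").lower()


def verifiable_reward(
    completion: str, ground_truth: str, reward_value: float = 1.0
) -> float:
    """Binary reward: reward_value if the answer is verified, else 0.0."""
    predicted = extract_final_answer(completion)
    if predicted is None:
        # Nothing to verify, so no reward is given.
        return 0.0
    if normalize(predicted) == normalize(ground_truth):
        return reward_value
    return 0.0


if __name__ == "__main__":
    print(verifiable_reward("2^10 is 1024. Answer: 1,024.", "1024"))
    print(verifiable_reward("I believe the result is 12", "12"))
```

In a reinforcement learning loop, a score like this would stand in for a learned reward model on prompts whose answers can be checked. The policy optimizer and the real answer-checking logic are outside the scope of this sketch.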

3. Tülu 3 405B vs. Other Open-Weight Models: A Comparative Analysis

- Compared with other open-weight models such as Llama 3.1, Tülu 3 405B delivers stronger performance. It surpasses Llama 3.1 on various benchmarks, particularly on complex tasks like MATH, which highlights the effectiveness of RLVR. This is notable because Llama 3.1 is itself a well-regarded model in the open-source AI community.

- The performance gap between Tülu 3 405B and other open-weight models is most apparent at the largest scale, where RLVR yields its biggest improvements. This suggests that the advantages of RLVR may become more pronounced as models continue to grow.

- The success of Tülu 3 405B also raises questions about the future of open-source AI. If open models like Tülu 3 405B can match or even surpass proprietary models like GPT-4o, open-source AI could play a more dominant role in the industry and help democratize access to advanced AI technologies.