QwQ-32B: The Open-Source Reasoning Revolution - A Deep Dive into Alibaba's New AI Model

In the fast-moving field of artificial intelligence, reasoning models are quickly becoming the next major battleground. These systems are designed to work through multi-step problems rather than simply produce fluent text. On March 6, 2025, Alibaba's Qwen Team unveiled QwQ-32B, a reasoning model with roughly 32 billion parameters. Built for demanding tasks in mathematics, coding, and logical deduction, QwQ-32B is a significant step forward for open-source AI.

Today, we release QwQ-32B, our new reasoning model with only 32 billion parameters that rivals cutting-edge reasoning model, e.g., DeepSeek-R1.

— Qwen (@Alibaba_Qwen) March 5, 2025

Blog: https://t.co/zCgACNdodj

HF: https://t.co/pfjZygOiyQ

ModelScope: https://t.co/hcfOD8wSLa

Demo: https://t.co/DxWPzAg6g8

Qwen Chat:…

This release isn't just another model; it's a bold statement. QwQ-32B offers a competitive, freely available alternative to proprietary reasoning models such as OpenAI's o1 series. Qwen also reports that it rivals DeepSeek-R1, an open-weight model with 671 billion total parameters (about 37 billion active per token), at a fraction of the size. With strong benchmark results, a training methodology rooted in reinforcement learning, and a commitment to open access, QwQ-32B is poised to reshape expectations in the AI reasoning domain.

This article takes a close look at QwQ-32B: its capabilities, its architecture, its strengths and limitations, and its broader implications for the global AI community.

The Dawn of Reasoning Models: Beyond Text Generation

Reasoning models are the future of AI. They go beyond simple text generation, enabling machines to think, analyze, and solve complex problems like never before.

Reasoning models represent a fundamental paradigm shift in artificial intelligence. Traditional language models, such as the ubiquitous GPT series, primarily focus on generating text that mimics human language. While these models excel at tasks like writing articles, translating languages, and answering questions based on existing knowledge, they often falter when confronted with problems requiring deep analytical thinking, multi-step logic, or creative problem-solving.

Consider the challenge of solving intricate mathematical problems or writing complex code. These tasks demand more than just rote memorization or pattern recognition; they require the ability to understand underlying principles, apply logical reasoning, and explore multiple potential solutions. This is where reasoning models like QwQ-32B come into their own.

QwQ-32B addresses these shortcomings by being trained to reason at length before giving an answer. Rather than replying immediately, it questions its assumptions, explores multiple pathways to a solution, and engages in a form of self-dialogue to refine its thinking. This focus on deliberate reasoning lets QwQ-32B handle tasks on which conventional language models often struggle.

Alibaba's Qwen Team: Building on a Strong Foundation

QwQ-32B is the latest triumph from Alibaba's Qwen Team, building on the success of Qwen2.5-32B with significant advancements in reinforcement learning.

Developed by the Qwen Team at Alibaba, QwQ-32B was not born in a vacuum. It is built on Qwen2.5-32B, inheriting that model's architecture and pretrained knowledge. QwQ-32B's significant advances come from reinforcement learning (RL) techniques and a sharper focus on domain-specific reasoning.

The Qwen Team recognized that simply scaling up the size of a model wasn't enough to achieve true reasoning capabilities. They needed to develop a new training methodology that would allow the model to learn how to think, not just how to generate text. This led to the adoption of reinforcement learning, where the model is rewarded for producing correct answers and penalized for making mistakes.

Furthermore, QwQ-32B is released under the permissive Apache 2.0 license, a critical decision that underscores Alibaba's commitment to open-source AI. This license allows researchers and developers worldwide to freely access, experiment with, modify, and distribute the model. QwQ-32B is readily available on popular platforms like Hugging Face and ModelScope, inviting the global AI community to contribute to its ongoing development and refinement.

Decoding the Architecture: A Deep Dive into the Inner Workings

QwQ-32B's architecture combines proven transformer techniques with an innovative, RL-heavy training recipe to achieve strong reasoning performance.

QwQ-32B is a dense (non-mixture-of-experts) decoder-only transformer with 32.5 billion parameters. Rather than introducing reasoning-specific architecture, it inherits the design of Qwen2.5-32B, which combines several components that are now standard in large language models:

- Rotary Positional Embedding (RoPE): RoPE encodes each token's position by rotating its query and key vectors, so attention scores depend on the relative distance between tokens. This helps the model keep track of order across long chains of logical steps.

- SwiGLU Activation Functions: The feed-forward layers use SwiGLU, a gated activation in which one linear projection, passed through the SiLU (Swish) function, multiplies a second projection element-wise. Gated activations like this tend to improve model quality compared with plain ReLU or GELU layers.

- RMSNorm: RMSNorm rescales each hidden vector by its root mean square. It skips the mean-centering step of standard LayerNorm, which makes it slightly cheaper, while still keeping training stable.

- Attention QKV Bias: The query, key, and value projections in each attention layer add learnable bias terms to their outputs.

- 64 Layers and 40 Attention Heads: The model stacks 64 transformer layers. Its attention uses grouped-query attention (GQA), in which 40 query heads share 8 key/value heads; this shrinks the memory needed to cache keys and values during long generations.

- 131,072-Token Context Length: The Hugging Face model card lists a full context length of 131,072 tokens (the earlier QwQ-32B-Preview supported 32,768). This makes the model well suited to tasks that require extended context, such as analyzing long documents or debugging complex codebases.
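To make the components above concrete, here is a minimal pure-Python sketch of RMSNorm, a SwiGLU feed-forward layer, a linear projection with the optional bias used for Q/K/V, and RoPE. It works on plain lists for readability, not speed. The function names, the `eps` value, and the RoPE `base` of 10,000 (the value used in the original RoPE paper) are illustrative assumptions rather than QwQ-32B's actual configuration. This version rotates adjacent dimension pairs, whereas Hugging Face-style implementations pair dimension *i* with *i + d/2*; both are forms of RoPE.

```python
import math

def linear(x, weight, bias=None):
    """y = W x (+ b). `weight` is a list of rows, one per output feature."""
    out = [sum(w * xi for w, xi in zip(row, x)) for row in weight]
    if bias is not None:
        # QKV bias: the query/key/value projections add a learned bias vector.
        out = [o + b for o, b in zip(out, bias)]
    return out

def rms_norm(x, weight, eps=1e-6):
    """Scale x by 1/RMS(x), then by a learned per-feature weight (no mean-centering)."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]

def silu(z):
    """SiLU/Swish: z * sigmoid(z), written to avoid overflow for large |z|."""
    if z >= 0:
        return z / (1.0 + math.exp(-z))
    e = math.exp(z)
    return z * e / (1.0 + e)

def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward: down(silu(gate(x)) * up(x)), with no bias terms."""
    gate = [silu(g) for g in linear(x, w_gate)]
    up = linear(x, w_up)
    hidden = [g * u for g, u in zip(gate, up)]
    return linear(hidden, w_down)

def rope(vec, position, base=10000.0):
    """Rotate each adjacent pair (x[2k], x[2k+1]) by angle position * base**(-2k/d)."""
    d = len(vec)
    if d % 2:
        raise ValueError("RoPE needs an even dimension")
    out = list(vec)
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out[i] = vec[i] * c - vec[i + 1] * s
        out[i + 1] = vec[i] * s + vec[i + 1] * c
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

if __name__ == "__main__":
    q, k = [0.3, -1.2, 0.5, 2.0], [1.0, 0.4, -0.7, 0.1]
    # Both pairs of positions are 2 apart, so the two attention scores match.
    print(dot(rope(q, 5), rope(k, 3)), dot(rope(q, 12), rope(k, 10)))
```

The two printed scores agree up to floating-point rounding. That relative-position property is the main reason RoPE is used for attention.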

However, the standout feature of QwQ-32B is how heavily it relies on reinforcement learning. Whereas pretraining learns from a static dataset, RL lets the model refine its outputs based on outcome-based rewards, that is, rewards that depend on whether the final result is correct. For mathematics, the Qwen Team used an accuracy verifier that checks final answers; for coding, a code execution server that tests whether generated programs pass predefined test cases. According to the team, this approach significantly improves the model's ability to generalize, particularly in the challenging domains of mathematics and programming.
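Outcome-based rewards are simple to express in code. The sketch below is not the Qwen Team's implementation: it assumes that math completions put their final answer in a LaTeX `\boxed{...}` and that coding tasks come with stdin/stdout test cases, and the helper names `math_reward` and `code_reward` are hypothetical. Each function returns 1.0 only when the final outcome is correct and 0.0 otherwise, without judging the intermediate reasoning.

```python
import re
import subprocess
import sys

# Matches \boxed{...} whose content has no nested braces.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")

def math_reward(completion: str, reference: str) -> float:
    """1.0 if the last boxed answer exactly matches the reference string, else 0.0."""
    answers = BOXED.findall(completion)
    if not answers:
        return 0.0
    return 1.0 if answers[-1].strip() == reference.strip() else 0.0

def code_reward(program: str, test_cases, timeout: float = 5.0) -> float:
    """1.0 if the program passes every (stdin, expected_stdout) case, else 0.0.

    Runs untrusted code in a plain subprocess; a real system needs a proper sandbox.
    """
    for stdin_text, expected in test_cases:
        try:
            result = subprocess.run(
                [sys.executable, "-c", program],
                input=stdin_text,
                capture_output=True,
                text=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return 0.0  # treat time-outs as failures
        if result.returncode != 0 or result.stdout.strip() != expected.strip():
            return 0.0
    return 1.0

if __name__ == "__main__":
    print(math_reward(r"... so the answer is \boxed{42}", "42"))  # 1.0
    print(code_reward("print(int(input()) * 2)", [("3\n", "6"), ("10\n", "20")]))  # 1.0
```

Exact string matching is deliberately strict here; a production verifier would also accept equivalent forms such as `1/2` and `0.5`.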

Benchmark Domination: Proving Its Mettle

QwQ-32B isn't just hype; it's backed by impressive benchmark results. It's outperforming the competition in key areas like math and coding.

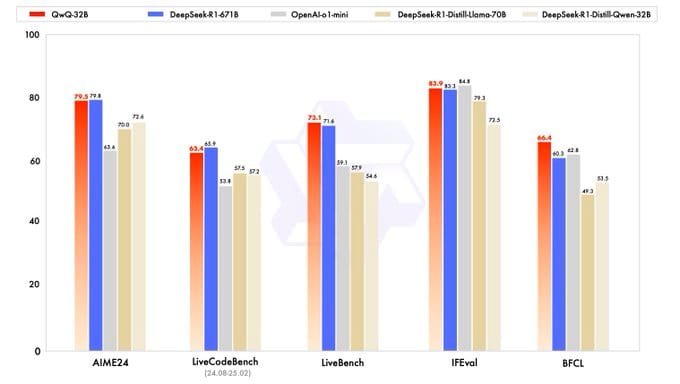

QwQ-32B has been evaluated on a range of industry-standard benchmarks, and the results are strong, placing it among the leading open-source reasoning models. (The figures below match those the Qwen Team first published for QwQ-32B-Preview in November 2024.) Let's examine some key benchmarks:

- MATH-500: QwQ-32B scores 90.6% on MATH-500, a 500-problem benchmark of competition-level mathematics. That slightly exceeds OpenAI's o1-mini (90.0%) and clearly surpasses Claude 3.5 Sonnet (78.3%).

- AIME (American Invitational Mathematics Examination): With a score of 50.0% on the notoriously difficult AIME, QwQ-32B far outperforms Claude 3.5 Sonnet (16.0%), highlighting its strength in advanced mathematics.

- GPQA (Graduate-Level Google-Proof Q&A): A score of 65.2% on GPQA reflects robust scientific reasoning, on par with Claude 3.5 Sonnet (65.0%) on questions that require in-depth scientific knowledge.

- LiveCodeBench: Scoring 50.0% on LiveCodeBench, QwQ-32B shows solid programming ability. The benchmark continuously collects fresh problems from competitive programming platforms such as LeetCode, AtCoder, and Codeforces, which reduces the risk that test problems leaked into training data.

These results underscore QwQ-32B's ability to excel in technical domains that demand deep reasoning, placing it among the strongest open-source models and making it a credible contender against closed-source systems.

To further illustrate QwQ-32B's competitive performance, consider the following comparison table:

| Benchmark | QwQ-32B | OpenAI o1-mini | Claude 3.5 Sonnet |
|---|---|---|---|
| MATH-500 | 90.6% | 90.0% | 78.3% |
| AIME | 50.0% | N/A | 16.0% |
| GPQA | 65.2% | N/A | 65.0% |
| LiveCodeBench | 50.0% | N/A | N/A |
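Reading a comparison table like this one is simple arithmetic on percentage points. The snippet below encodes only the scores shown in the table, treating N/A as missing rather than zero; the dictionary layout and the `gap` helper are illustrative.

```python
from typing import Optional

# Scores (%) from the comparison table; None marks an N/A cell.
SCORES = {
    "MATH-500": {"QwQ-32B": 90.6, "OpenAI o1-mini": 90.0, "Claude 3.5 Sonnet": 78.3},
    "AIME": {"QwQ-32B": 50.0, "OpenAI o1-mini": None, "Claude 3.5 Sonnet": 16.0},
    "GPQA": {"QwQ-32B": 65.2, "OpenAI o1-mini": None, "Claude 3.5 Sonnet": 65.0},
    "LiveCodeBench": {"QwQ-32B": 50.0, "OpenAI o1-mini": None, "Claude 3.5 Sonnet": None},
}

def gap(benchmark: str, rival: str, model: str = "QwQ-32B") -> Optional[float]:
    """Lead of `model` over `rival` in percentage points, or None if either is N/A."""
    ours, theirs = SCORES[benchmark][model], SCORES[benchmark][rival]
    if ours is None or theirs is None:
        return None
    return round(ours - theirs, 1)  # rounds away float noise such as 0.5999999999999943

if __name__ == "__main__":
    for bench in SCORES:
        for rival in ("OpenAI o1-mini", "Claude 3.5 Sonnet"):
            delta = gap(bench, rival)
            shown = "n/a" if delta is None else f"{delta:+.1f} pts"
            print(f"{bench:14} vs {rival:18}: {shown}")
```

The output makes the comparison precise: a 34-point lead over Claude 3.5 Sonnet on AIME, but only 0.6 points over o1-mini on MATH-500 and 0.2 points over Claude 3.5 Sonnet on GPQA, gaps too small to read much into.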

Strengths Unveiled: What Makes QwQ-32B Shine

QwQ-32B's strengths lie in its ability to think critically, solve complex problems, and provide transparent explanations. It's a powerful tool for education, research, and real-world applications.

QwQ-32B's core strengths lie in its ability to tackle complex, multi-step problems with a reflective and deliberate approach. Unlike many models that rush to provide answers, QwQ-32B engages in a process of self-dialogue, questioning its assumptions, exploring alternative solutions, and carefully evaluating the evidence. This contemplative process, which mimics the way a student reasons through a difficult problem, significantly enhances its accuracy and reliability.

Here's a closer look at some of QwQ-32B's key strengths:

- Mathematical Prowess: Its 90.6% score on MATH-500 highlights its capacity to handle diverse and challenging competition problems, ranging from algebra to number theory.

- Programming Proficiency: A solid 50.0% score on LiveCodeBench demonstrates that it can write working solutions to unseen programming problems, making it a useful assistant for software developers.

- Transparent Reasoning: The model provides detailed, step-by-step explanations of its reasoning process, making it invaluable for educational and analytical purposes. Users can understand not just the answer, but also how the model arrived at that answer.

- Open Accessibility: As an open-source model available on platforms like Hugging Face, QwQ-32B empowers the global research community to build upon its foundation, fostering innovation and collaboration.
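In practice, the step-by-step reasoning arrives in the same text stream as the final answer. The sketch below assumes the reasoning is closed by a `</think>` tag, the convention used by QwQ-32B's chat format (the chat template may place the opening `<think>` in the prompt rather than the output); the function name `split_reasoning` is hypothetical. It separates the two parts so an application can show, log, or hide the reasoning.

```python
def split_reasoning(output: str, close_tag: str = "</think>") -> tuple[str, str]:
    """Split a completion into (reasoning, final_answer).

    Assumes the reasoning ends at the first `close_tag`. If the tag is missing,
    the whole text is returned as the answer and the reasoning is empty.
    """
    head, found, tail = output.partition(close_tag)
    if not found:
        return "", output.strip()
    # The opening tag may be absent if the chat template already added it to the prompt.
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()

if __name__ == "__main__":
    raw = "<think>\n17 * 3 = 51, and 51 + 4 = 55.\n</think>\n\nThe answer is 55."
    reasoning, answer = split_reasoning(raw)
    print(answer)  # The answer is 55.
```

A generation that was cut off before closing its reasoning lands in the answer slot under this rule, so an application may want to flag that case separately.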

Enthusiasm for QwQ-32B's capabilities has been palpable since its release. Posts on X (formerly Twitter) from March 6, 2025, reflect widespread excitement, with users noting its ability to rival DeepSeek-R1 and its potential to outshine even larger mixture-of-experts (MoE) models through continuous reinforcement learning training.

Acknowledging Limitations: Areas for Improvement

QwQ-32B is a powerful model, but it's not perfect. The Qwen Team openly acknowledges its limitations, including issues with language mixing and common sense reasoning.

Despite its impressive performance, QwQ-32B is not without its limitations. The Qwen Team has been commendably transparent about these shortcomings, emphasizing that the model remains a work in progress, open to refinement by the community. Some key limitations include:

- Language Mixing and Code-Switching: The model may mix languages or switch between them unexpectedly mid-response, reducing clarity and coherence, particularly in multilingual contexts.

- Recursive Reasoning Loops: It can occasionally get trapped in circular reasoning patterns, leading to lengthy and unproductive outputs that fail to reach a definitive conclusion.

- Common Sense Reasoning: While excelling in technical tasks that require specialized knowledge, QwQ-32B lags in areas that demand nuanced language understanding or everyday common sense reasoning.

- Safety Concerns: As an experimental model, QwQ-32B lacks fully developed safety measures to prevent the generation of harmful or biased content. This necessitates caution when deploying the model in sensitive applications.
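When deploying the model, the first two limitations can at least be detected after the fact. The heuristics below are hypothetical illustrations, not tools provided by the Qwen Team. One measures how much of a response consists of repeated word n-grams, a rough signal of a reasoning loop; the other measures the share of CJK ideographs, a rough signal of unexpected switching into Chinese during an English conversation. The thresholds are arbitrary assumptions to be tuned on real outputs.

```python
from collections import Counter

def repeated_ngram_ratio(text: str, n: int = 8) -> float:
    """Fraction of word n-grams that occur more than once (0.0 = no repetition)."""
    words = text.split()
    if len(words) < n:
        return 0.0
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    counts = Counter(ngrams)
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(ngrams)

def cjk_fraction(text: str) -> float:
    """Share of alphabetic characters that are CJK Unified Ideographs (U+4E00 to U+9FFF)."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return 0.0
    return sum("\u4e00" <= ch <= "\u9fff" for ch in letters) / len(letters)

def flag_output(text: str, loop_threshold: float = 0.5, mix_threshold: float = 0.05) -> list[str]:
    """Return warnings for a response that is expected to be in English."""
    flags = []
    if repeated_ngram_ratio(text) > loop_threshold:
        flags.append("possible reasoning loop")
    if cjk_fraction(text) > mix_threshold:
        flags.append("possible language mixing")
    return flags

if __name__ == "__main__":
    looping = "Wait, let me double-check that step again. " * 20
    print(flag_output(looping))  # ['possible reasoning loop']
    print(flag_output("The final answer is 42, 所以答案是 42."))  # ['possible language mixing']
```

These checks only catch symptoms; for safety concerns, a dedicated moderation layer is still needed.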

These limitations underscore the fact that QwQ-32B, while a powerful tool, is not a finished product. It requires further development and refinement to address these issues and ensure its responsible use.

Implications for the AI Landscape: A Disruptive Force

QwQ-32B is a game-changer for the AI industry. Its open-source nature challenges the dominance of closed-source models and democratizes access to cutting-edge technology.

The release of QwQ-32B has far-reaching implications for the entire AI ecosystem. By offering a high-performing reasoning model under an open-source license, Alibaba is directly challenging the dominance of closed-source systems from companies like OpenAI and Anthropic. This move echoes the disruptive impact of Linux on proprietary operating systems, suggesting that open-source AI could democratize access to cutting-edge technology and foster innovation across the globe.

For businesses, QwQ-32B opens up new possibilities in areas such as automation, decision-making, and technical problem-solving. Its permissive license allows commercial use, so enterprises can integrate the model into their existing workflows without paying per-token fees for proprietary APIs, although self-hosting carries its own hardware and operating costs.

Researchers also stand to benefit significantly from QwQ-32B's transparency and accessibility. They can use the model as a foundation for exploring new architectures, developing innovative training techniques, and pushing the boundaries of what's possible with reasoning AI.

Furthermore, QwQ-32B's emphasis on reinforcement learning signals a broader shift in AI development. As the gains from simply adding more training data and compute begin to show diminishing returns, alternative approaches such as test-time compute (letting a model spend more computation reasoning at inference time) and RL-based training are gaining traction. QwQ-32B's results with a relatively modest 32 billion parameters suggest that efficiency and targeted training may ultimately outpace brute-force scaling in the race for advanced reasoning capabilities.

Looking Ahead: The Future is Open-Source

QwQ-32B is a major step towards artificial general intelligence. Its open-source nature fosters collaboration and accelerates innovation in the field of AI.

QwQ-32B represents a bold step forward in the ongoing quest for artificial general intelligence (AGI). Its ability to compete with larger, closed-source models while remaining accessible to the public underscores the power of community-driven innovation. The Qwen Team has expressed its unwavering commitment to ongoing development, actively soliciting feedback from the community to address the model's limitations and further enhance its capabilities.

As of March 6, 2025, QwQ-32B is available for download on Hugging Face and ModelScope and can be tried online in Qwen Chat. The Qwen Team has also published a detailed blog post outlining how the model was created, providing valuable insights for researchers and developers.

For developers, educators, and businesses alike, QwQ-32B offers a versatile tool for exploring the frontiers of reasoning AI. Whether you're solving a complex equation, debugging a sprawling codebase, or powering the next generation of intelligent systems, QwQ-32B stands as a powerful testament to the transformative potential of open-source collaboration in shaping the future of artificial intelligence.

In a world where reasoning is rapidly emerging as the next great challenge for machines, QwQ-32B is more than just a model; it's a call to action for the global AI community to reflect, question, and build together.

Q&A: Your Questions About QwQ-32B Answered

Curious about QwQ-32B? We've compiled a list of frequently asked questions to help you understand this groundbreaking AI model.

Q: What is QwQ-32B?

A: QwQ-32B is a 32-billion-parameter reasoning model developed by Alibaba's Qwen Team. It excels in tasks requiring mathematical, coding, and logical deduction skills.

Q: Is QwQ-32B open-source?

A: Yes, QwQ-32B is released under the Apache 2.0 license, making it freely available for research and commercial use.

Q: How does QwQ-32B compare to other AI models?

A: QwQ-32B is competitive with leading reasoning models such as OpenAI's o1 series and DeepSeek-R1, a much larger open-weight model, particularly in tasks requiring mathematical and coding reasoning.

Q: What are the key strengths of QwQ-32B?

A: Its strengths include mathematical prowess, programming proficiency, transparent reasoning, and open accessibility.

Q: What are the limitations of QwQ-32B?

A: Limitations include language mixing, recursive reasoning loops, common sense reasoning deficits, and safety concerns.

Q: How was QwQ-32B trained?

A: QwQ-32B starts from the pretrained Qwen2.5-32B base model and is then post-trained with supervised fine-tuning and large-scale reinforcement learning driven by outcome-based rewards.

Q: Where can I download QwQ-32B?

A: QwQ-32B is available for download on platforms like Hugging Face and ModelScope.

Q: What license is QwQ-32B released under?

A: It's released under the Apache 2.0 license.

Q: What are the system requirements for running QwQ-32B?

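A: Running QwQ-32B requires substantial hardware. Its 32.5 billion parameters take about 65 GB in 16-bit precision (32.5 billion × 2 bytes), which typically means one or more data-center GPUs; 4-bit quantized versions (about 16 GB of weights) can run on a single high-end GPU, with extra memory needed for long contexts.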
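As a rough rule of thumb, the weights alone need the parameter count times the bytes per parameter, and the key/value cache grows linearly with context length. The sketch below uses the 32.5-billion-parameter count and 64 layers cited in this article. The 8 key/value heads and head dimension of 128 are assumptions based on the published Qwen2.5-32B configuration (check the model's `config.json`). The estimate ignores activations, quantization scales, and framework overhead, so real requirements are higher.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

def kv_cache_bytes(num_tokens: int, num_layers: int = 64, num_kv_heads: int = 8,
                   head_dim: int = 128, bytes_per_value: int = 2) -> int:
    """Key/value cache size: one key and one value vector per layer, KV head, and token."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens

PARAMS = 32.5e9  # total parameter count of QwQ-32B

if __name__ == "__main__":
    for label, nbytes in [("16-bit", 2), ("8-bit", 1), ("4-bit", 0.5)]:
        print(f"{label} weights: ~{weight_memory_gb(PARAMS, nbytes):.1f} GB")
    # With 16-bit cache entries: 256 KiB per token, 8 GiB for a 32,768-token context.
    print(kv_cache_bytes(1) // 1024, "KiB per token")
    print(kv_cache_bytes(32_768) / 2**30, "GiB at 32,768 tokens")
```

Grouped-query attention is what keeps the cache manageable: with 40 key/value heads instead of 8, the same context would need five times as much cache memory.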

Q: Can I use QwQ-32B for commercial purposes?

A: Yes, the Apache 2.0 license allows for commercial use of QwQ-32B.